This project was a collection of robotics algorithm demonstrations. I specifically worked on:

- Implementing Particle Filter Localization

- Implementing RRT* Path Planning

- Computer vision (CV) for lane-line detection

- Organization & Architecture

We created a somewhat more formal writeup of these projects here. Our code can be found here.

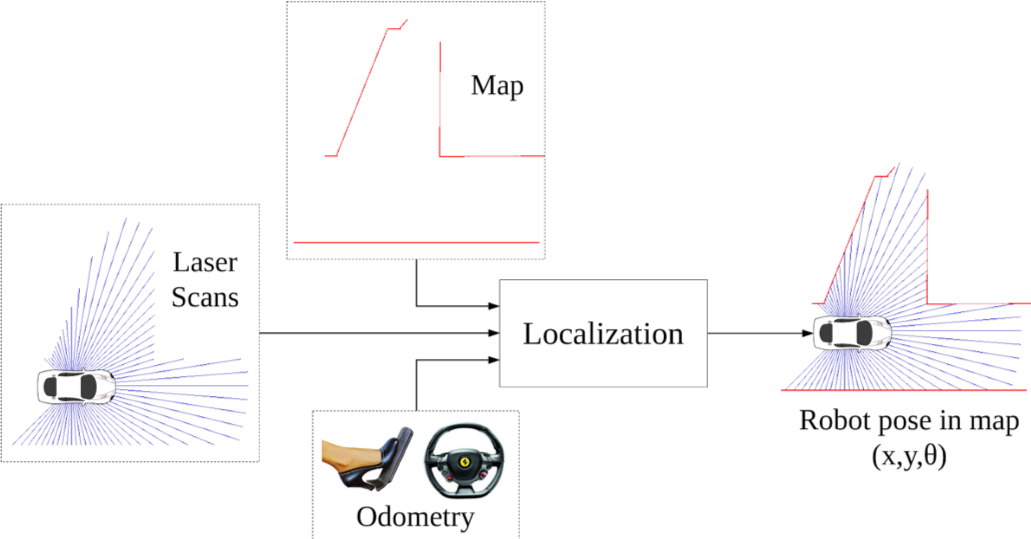

Localization

I wrote a particle filter localization algorithm. The robot keeps a list of possible places it could be (particles) and, at each time step, compares its current sensor data to what it would expect to see from each of those positions. Whichever particle matches best is used as the position estimate. Note that this method requires a map, since the expected readings are computed from it. The video shows the robot localizing itself in real time.
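The compare-and-pick loop described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the code that ran on the robot. It assumes the "map" is a handful of known landmark positions and the sensor reports a range to each one. The names `expected_ranges` and `particle_filter_step`, the Gaussian sensor model, and the noise values are all assumptions made for the example.

```python
import numpy as np

def expected_ranges(particles, landmarks):
    """Range from every particle (N, 2) to every landmark (M, 2), shape (N, M)."""
    diff = particles[:, None, :] - landmarks[None, :, :]
    return np.linalg.norm(diff, axis=2)

def particle_filter_step(particles, measured, landmarks, rng,
                         sigma=0.2, motion_noise=0.05):
    """One update: diffuse, weight by sensor agreement, pick best, resample."""
    # Motion update (assumed): a stationary robot, so particles only diffuse.
    particles = particles + rng.normal(0.0, motion_noise, particles.shape)

    # Measurement update: compare the real reading to what each particle
    # would see, using an assumed Gaussian sensor model.
    err = expected_ranges(particles, landmarks) - measured
    log_w = -0.5 * np.sum((err / sigma) ** 2, axis=1)
    weights = np.exp(log_w - log_w.max())  # subtract max for numerical stability
    weights /= weights.sum()

    # The best-matching particle is the position estimate.
    best = particles[np.argmax(weights)]

    # Resample so that likely particles are duplicated and unlikely ones die out.
    idx = rng.choice(len(particles), size=len(particles), p=weights)
    return particles[idx], best
```

On a real robot the motion update would use odometry rather than pure diffusion, and the expected readings would typically come from ray casting a lidar scan into an occupancy grid.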

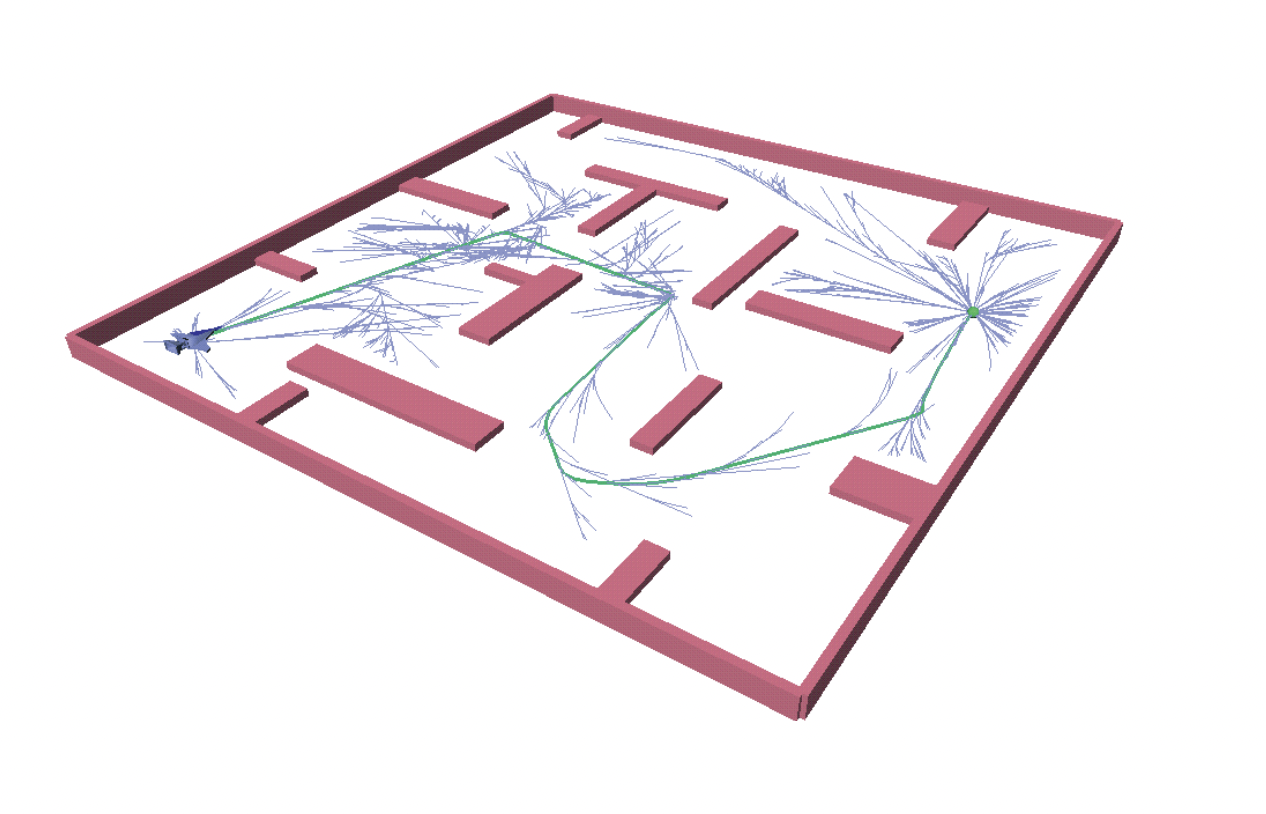

RRT Pathfinding

RRT* is a path planning algorithm. It differs notably from graph search algorithms like A* in that it uses random sampling, and thus does not have to search the entire map. This is much faster, at the cost of less optimal paths. The gif shows a Rapidly-exploring Random Tree (RRT), and you can see how at every iteration the random branches reach more and more of the white space, but that the paths are not straightforward. The piece that makes RRT* useful is that every new node “snaps” to a different nearby node if the resulting path from the start is shorter, and existing neighbors are rewired through the new node when that shortens their own paths. This leads to paths that tend to radiate out from the starting position, instead of spiraling.
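To make the “snap” step concrete, here is a small 2D sketch of RRT*. It is a simplified illustration under stated assumptions, not our project code. The `sample` and `collision_free` callables, the `uniform_sampler` helper, and the `step` and `radius` values are hypothetical. Rewiring here does not propagate cost changes to descendants, which a fuller implementation would do.

```python
import math
import random

def uniform_sampler(lo, hi, seed=0):
    """Hypothetical helper: seeded uniform samples in the square [lo, hi]^2."""
    rng = random.Random(seed)
    return lambda: (rng.uniform(lo, hi), rng.uniform(lo, hi))

def rrt_star(start, sample, collision_free, n_iter=500, step=0.5, radius=1.0):
    """Grow a tree from `start`; returns (nodes, parent, cost) as parallel lists."""
    nodes, parent, cost = [start], [None], [0.0]
    for _ in range(n_iter):
        target = sample()
        nearest = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], target))
        d = math.dist(nodes[nearest], target)
        if d == 0.0:
            continue
        # Steer: move at most `step` from the nearest node toward the sample.
        t = min(1.0, step / d)
        base = nodes[nearest]
        new = (base[0] + t * (target[0] - base[0]),
               base[1] + t * (target[1] - base[1]))
        if not collision_free(base, new):
            continue
        near = [i for i in range(len(nodes))
                if i == nearest
                or (math.dist(nodes[i], new) <= radius
                    and collision_free(nodes[i], new))]
        # Choose parent: "snap" to whichever neighbor gives the cheapest path.
        best = min(near, key=lambda i: cost[i] + math.dist(nodes[i], new))
        new_idx = len(nodes)
        nodes.append(new)
        parent.append(best)
        cost.append(cost[best] + math.dist(nodes[best], new))
        # Rewire: neighbors switch to the new node if that shortens their path.
        for i in near:
            through_new = cost[new_idx] + math.dist(new, nodes[i])
            if through_new < cost[i]:
                parent[i], cost[i] = new_idx, through_new
    return nodes, parent, cost

def path_to(nodes, parent, goal):
    """Walk parent links back from the node closest to `goal`."""
    i = min(range(len(nodes)), key=lambda k: math.dist(nodes[k], goal))
    path = []
    while i is not None:
        path.append(nodes[i])
        i = parent[i]
    return path[::-1]
```

Dropping the choose-parent and rewire blocks leaves a plain RRT, which is the wandering tree shown in the gif.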

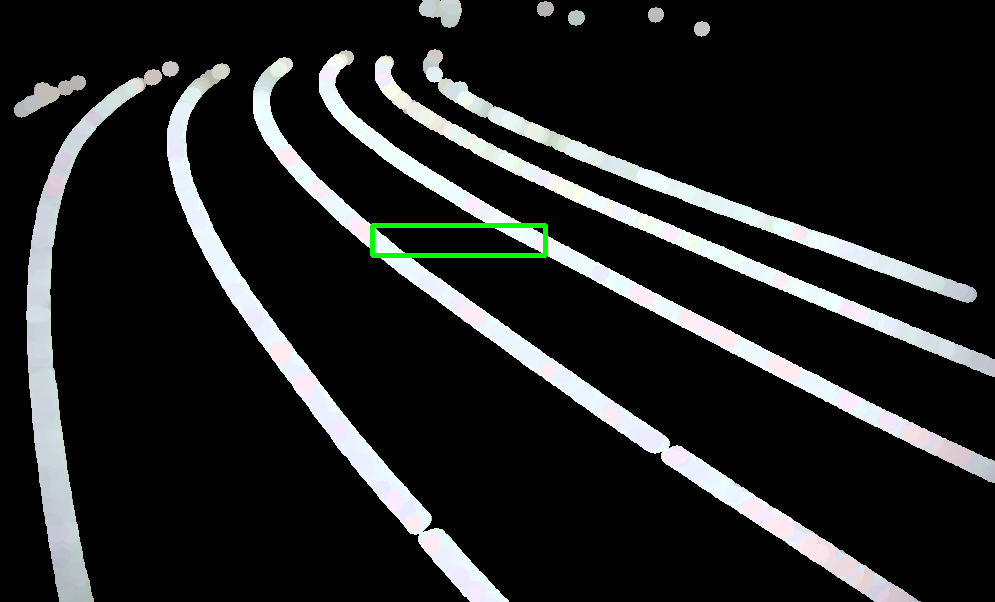

CV Lines

For the bot’s final task, we had to navigate a racetrack and, importantly, stay in our lane. I did the CV for this step. The first thing I tried was to isolate all of the line segments that OpenCV’s Hough line detection could find in the image, and group them together to identify curves. This was horrendously expensive. The second approach was to scale the image way down and just use color thresholding, then assume that whichever lane lines are nearest the center of the image are the correct ones. The second screenshot shows how, on curves, this approach can move us into the adjacent lane.
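The second approach can be sketched with plain NumPy. This is an illustrative example, not the code we ran. The function names, the color bounds, the `scale` factor, and the choice of a single image row are assumptions.

```python
import numpy as np

def lane_mask(image_rgb, lower, upper, scale=4):
    """Downscale by striding, then mark pixels whose color lies in [lower, upper]."""
    small = image_rgb[::scale, ::scale]
    lower = np.asarray(lower)
    upper = np.asarray(upper)
    return np.all((small >= lower) & (small <= upper), axis=2)

def lanes_near_center(mask, row):
    """Columns of the lane pixels closest to the image center on each side.

    Returns (left_col, right_col); either entry is None if nothing was found.
    """
    cols = np.flatnonzero(mask[row])
    center = mask.shape[1] // 2
    left = cols[cols < center]
    right = cols[cols >= center]
    left_col = int(left.max()) if left.size else None
    right_col = int(right.min()) if right.size else None
    return left_col, right_col
```

Because only the lines nearest the center are kept, a sharp curve that swings a neighboring lane line toward the middle of the frame will be picked up instead. That is the failure the second screenshot shows.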

Conveniently, it turned out that the robot’s POV was such that we never risked running into the adjacent lane. The last thing I had to do then was add a filter to reject the horizontal and other miscellaneous lines (you can see a few in the videos) and add some math to pick the “drive point.” We could then navigate to that with Pure Pursuit and off we go!
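As a rough sketch of the last two pieces, the snippet below rejects near-horizontal segments by angle and turns a drive point into a steering angle with the standard pure pursuit formula for a bicycle model. The 20 degree cutoff, the function names, and the robot-frame convention (x forward, y to the left) are assumptions. The math we used to pick the drive point is not reproduced here.

```python
import math

def keep_lane_segments(segments, min_angle_deg=20.0):
    """Drop segments that are closer to horizontal than `min_angle_deg`.

    Each segment is (x1, y1, x2, y2) in image pixels.
    """
    kept = []
    for x1, y1, x2, y2 in segments:
        angle = abs(math.degrees(math.atan2(y2 - y1, x2 - x1)))
        angle = min(angle, 180.0 - angle)  # 0 = horizontal, 90 = vertical
        if angle >= min_angle_deg:
            kept.append((x1, y1, x2, y2))
    return kept

def pure_pursuit_steering(x, y, wheelbase):
    """Steering angle (rad) that arcs the robot through the point (x, y).

    (x, y) is the drive point in the robot frame: x forward, y to the left.
    """
    lookahead_sq = x * x + y * y
    if lookahead_sq == 0.0:
        return 0.0
    curvature = 2.0 * y / lookahead_sq  # circle through the origin and (x, y)
    return math.atan(wheelbase * curvature)
```

A drive point straight ahead gives zero steering, and a point to the left gives a positive (left) steering angle.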

Controls

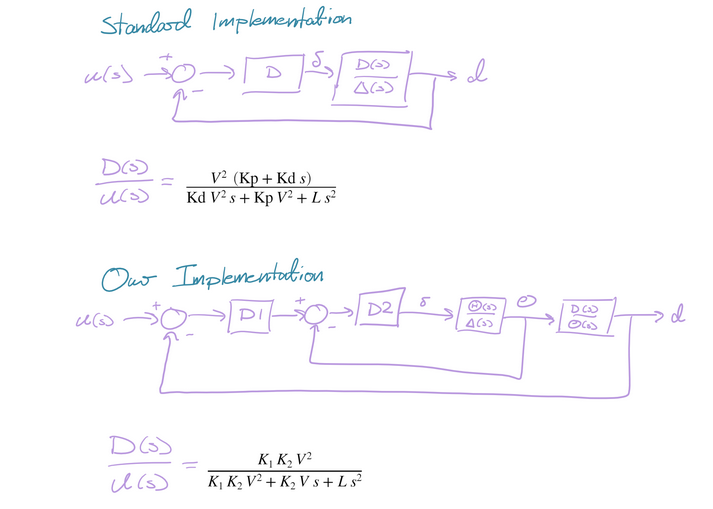

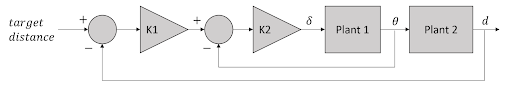

I used single-input, single-output (SISO) control theory for a wall-following program. We used lidar to find the nearby wall, and used feedback control to maneuver along it. The gain on the real bot was unfortunately a bit too high, hence the visible oscillation.
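The toy simulation below gives a feel for why gain choice matters in a wall follower. It is a sketch under simplifying assumptions, not a model of our robot. The wall is straight and on the robot's left, the controller commands turn rate directly, and the `simulate` function, its gains, and the kinematics are all hypothetical.

```python
import math

def simulate(kp, kd, desired=1.0, dist=1.5, speed=1.0, dt=0.02, steps=500):
    """Simulate a PD wall follower; returns the distance error at each step.

    `dist` is the distance to a wall on the left. A positive heading means
    the robot is turned toward the wall, so the distance shrinks.
    """
    heading = 0.0
    prev_error = None
    errors = []
    for _ in range(steps):
        error = dist - desired  # positive means too far from the wall
        d_error = 0.0 if prev_error is None else (error - prev_error) / dt
        prev_error = error
        turn_rate = kp * error + kd * d_error  # feedback law
        heading += turn_rate * dt
        dist -= speed * math.sin(heading) * dt
        errors.append(error)
    return errors

def zero_crossings(errors):
    """Count sign changes, a crude measure of oscillation."""
    return sum(1 for a, b in zip(errors, errors[1:]) if a * b < 0)
```

In this toy model, `simulate(kp=4.0, kd=0.0)` weaves back and forth across the target distance, while adding damping with `simulate(kp=4.0, kd=4.0)` settles smoothly. On real hardware, sensor noise and actuator delay limit how much gain is usable.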

Website

For more of Dizzy the Robot, check out our website and lab reports!